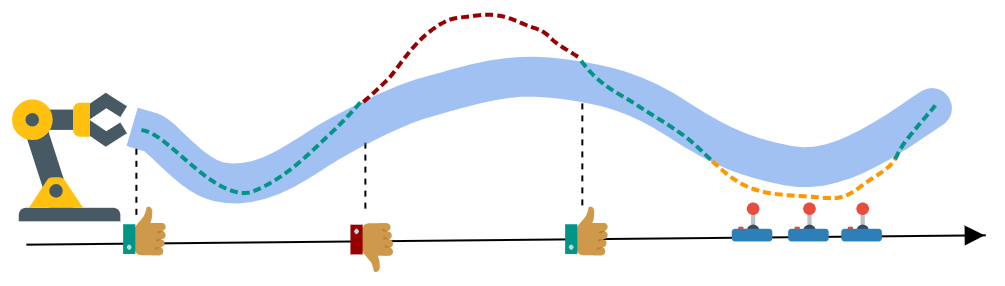

Learning to solve complex manipulation tasks from visual observations is a dominant challenge for real-world robot learning. Deep reinforcement learning algorithms have recently demonstrated impressive results, although they still require an impractical amount of time-consuming trial-and-error iterations. In this work, we consider the promising alternative paradigm of interactive learning where a human teacher provides feedback to the policy during execution, as opposed to imitation learning where a pre-collected dataset of perfect demonstrations is used. Our proposed CEILing (Corrective and Evaluative Interactive Learning) framework combines both corrective and evaluative feedback from the teacher to train a stochastic policy in an asynchronous manner, and employs a dedicated mechanism to trade off human corrections with the robot’s own experience. We present results obtained with our framework in extensive simulation and real-world experiments that demonstrate that CEILing can effectively solve complex robot manipulation tasks directly from raw images in less than one hour of real-world training.

Correct Me if I am Wrong: Interactive Learning for Robotic Manipulation

Eugenio Chisari, Tim Welschehold, Joschka Boedecker, Wolfram Burgard, and Abhinav Valada